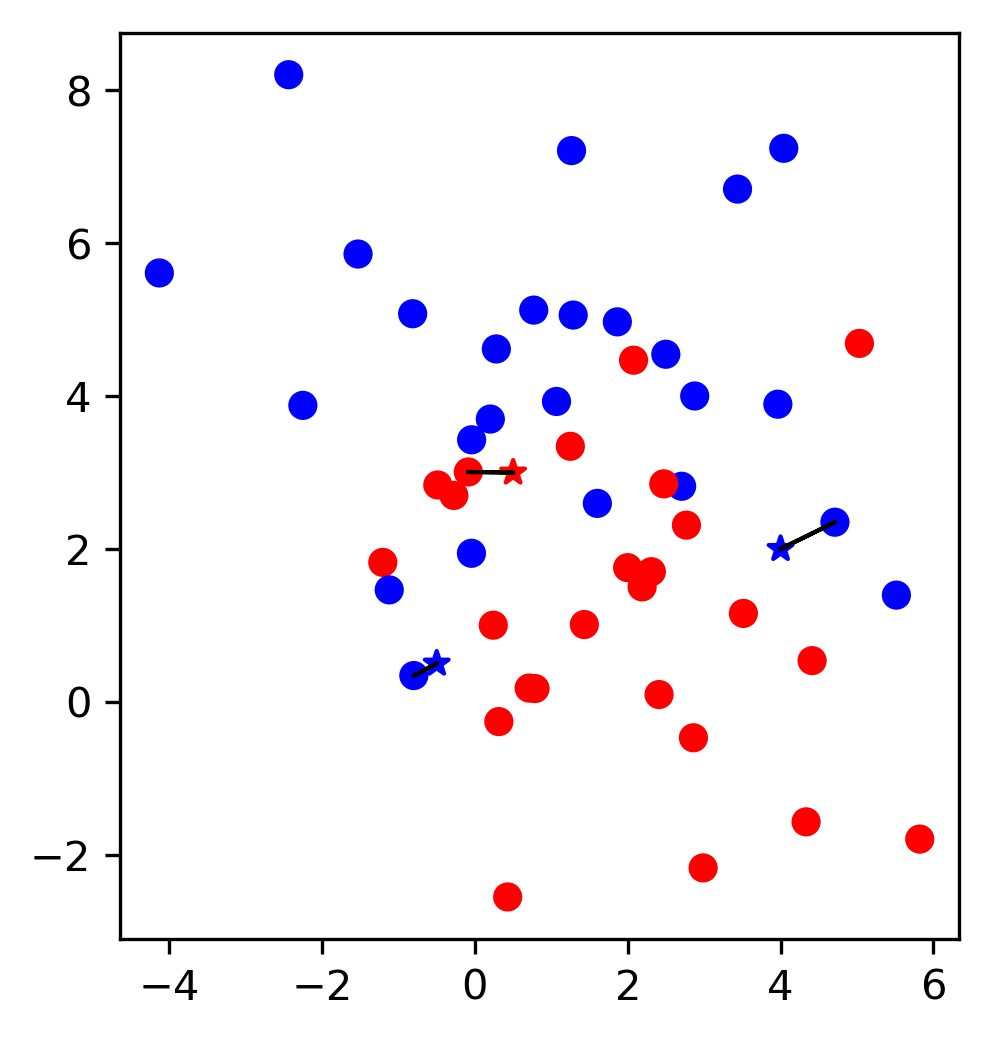

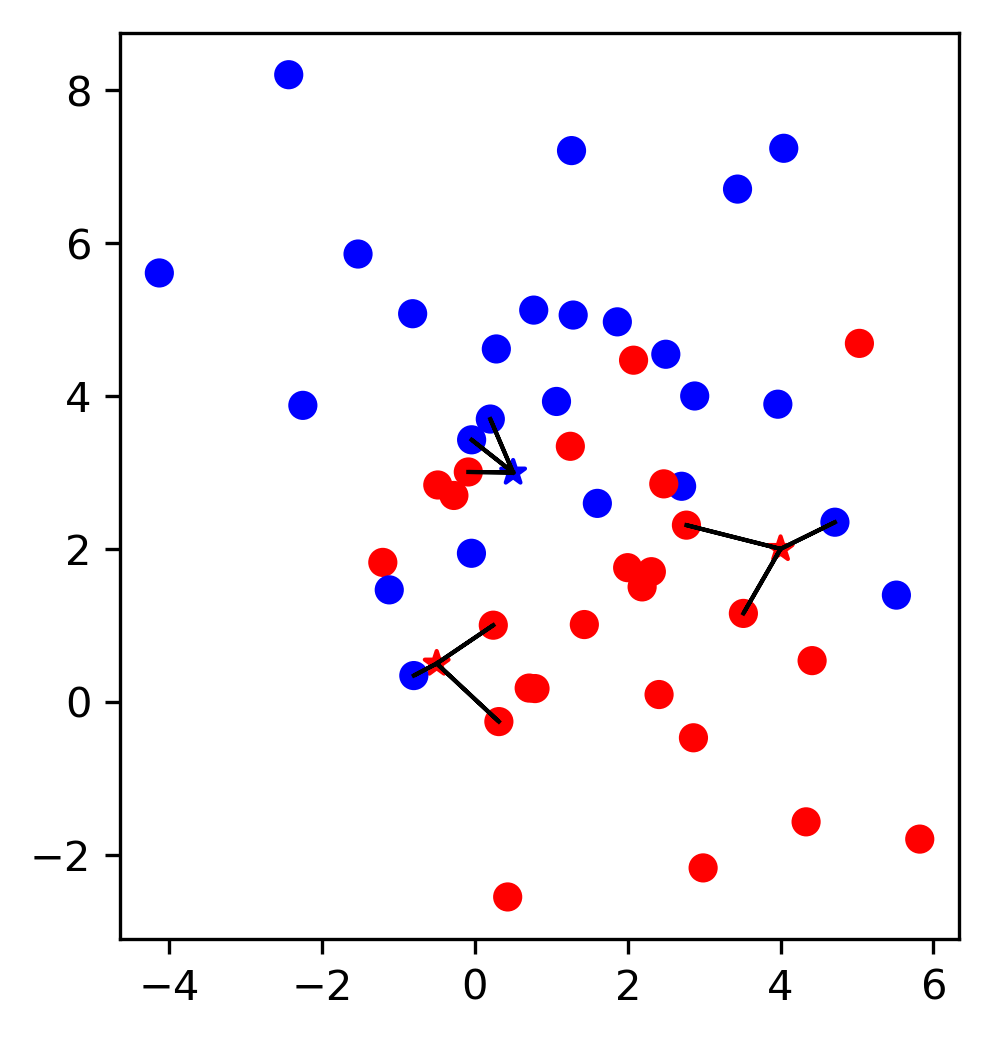

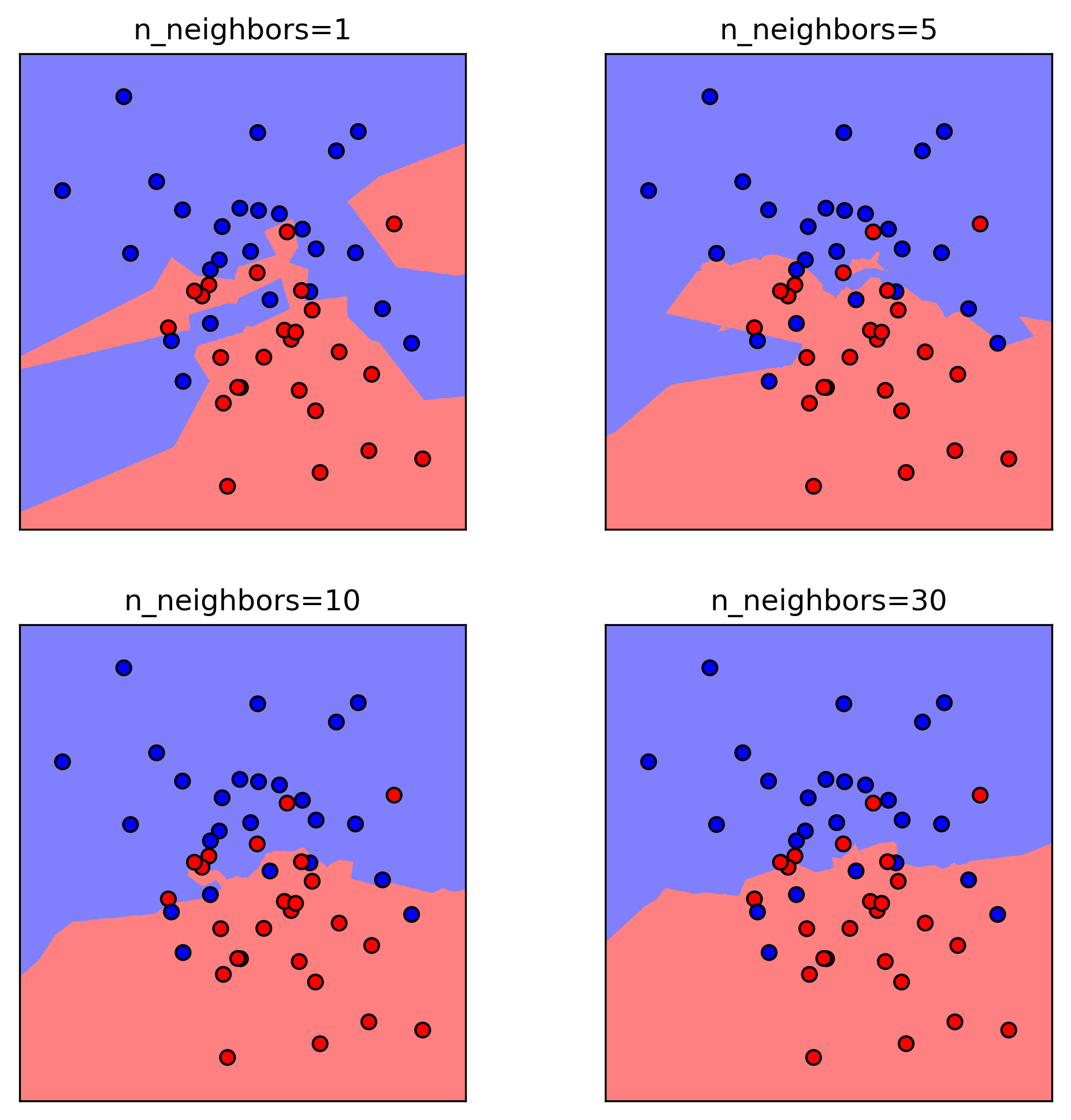

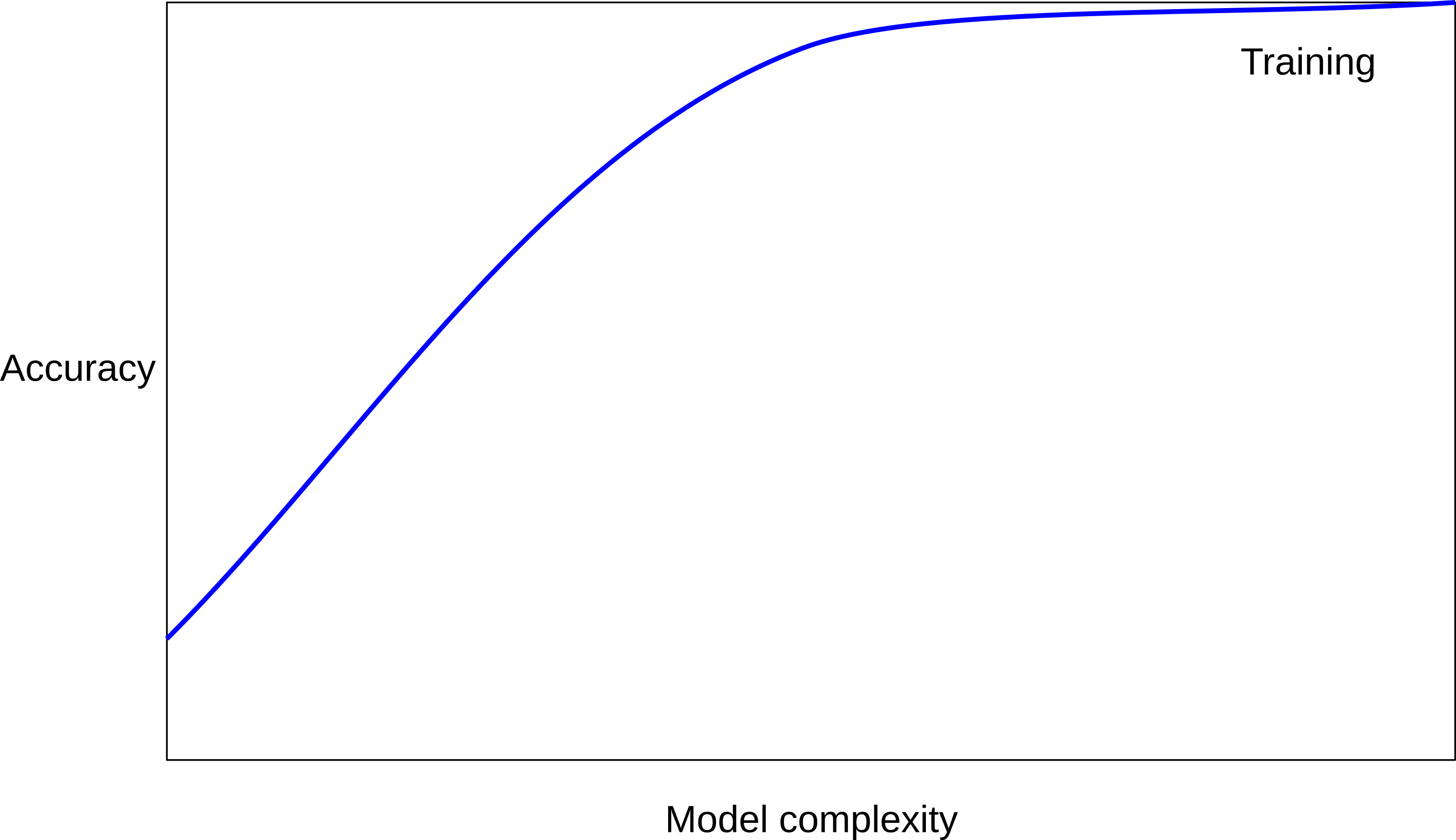

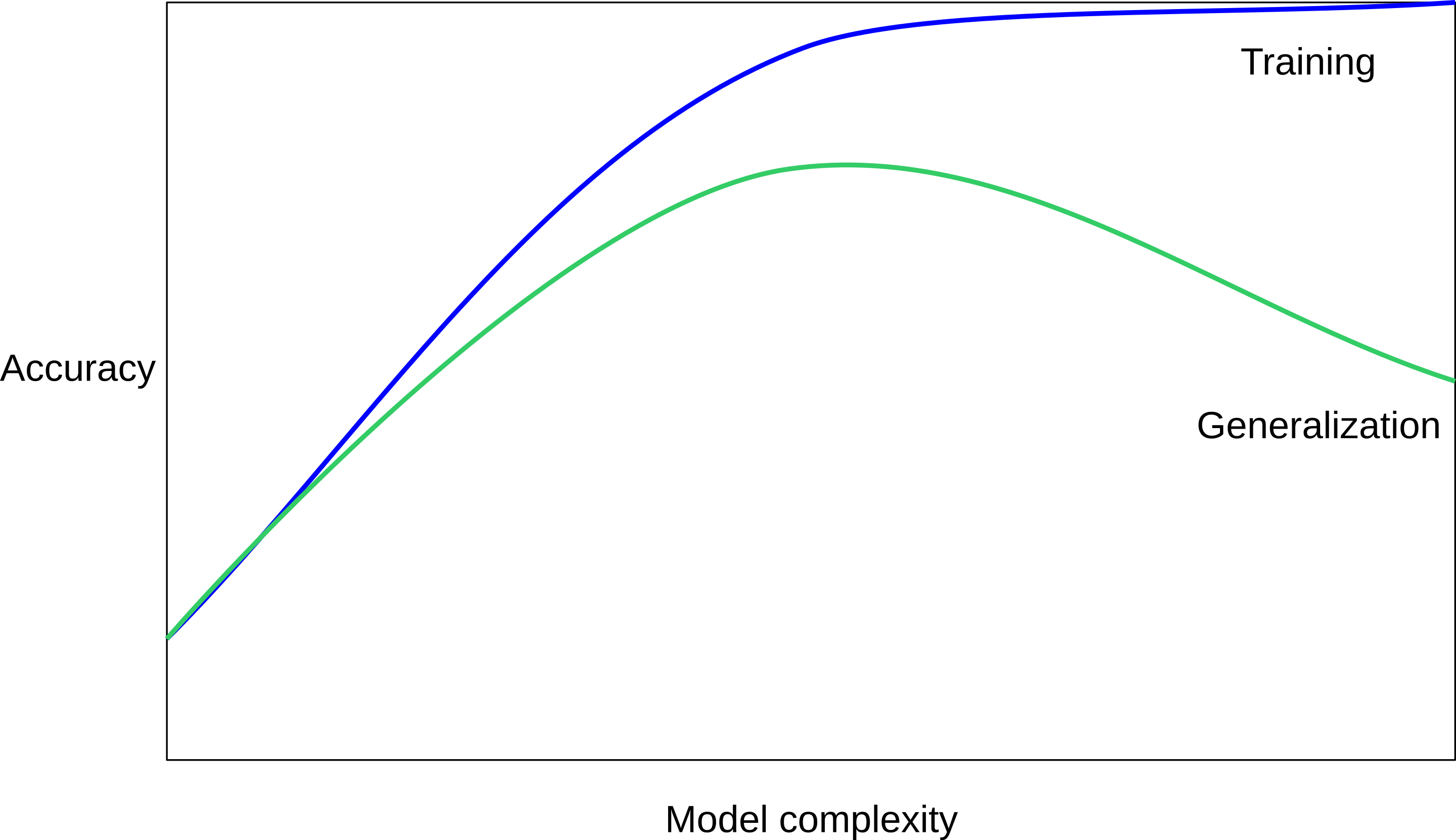

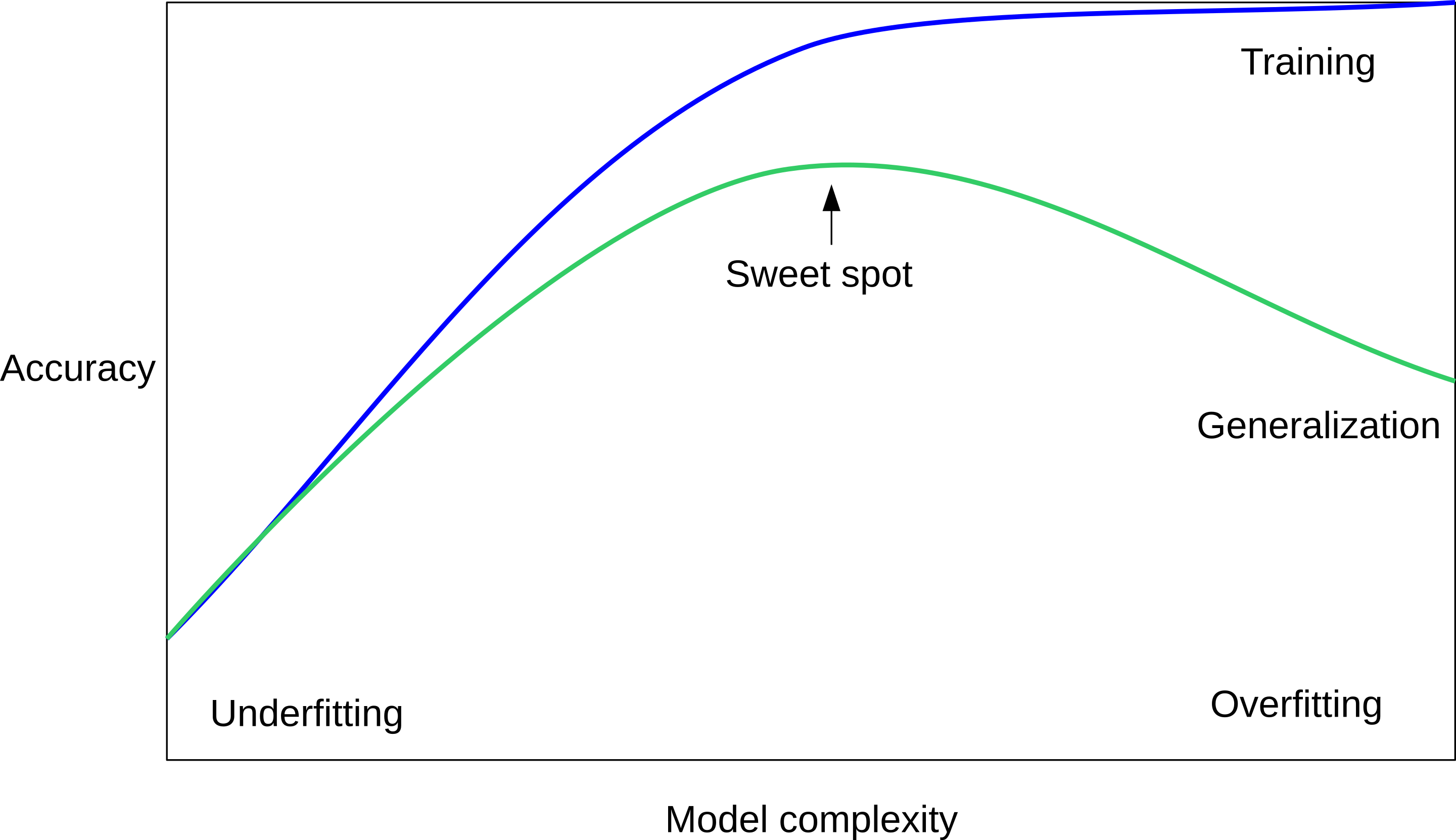

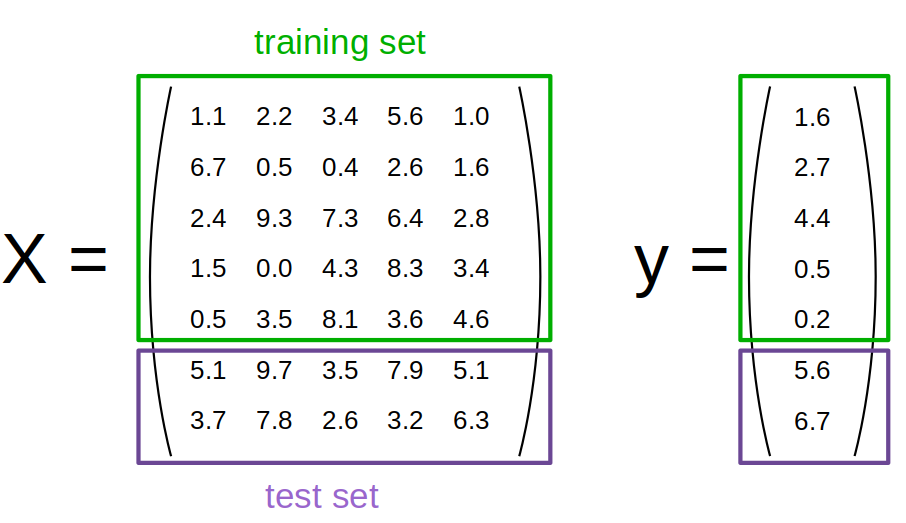

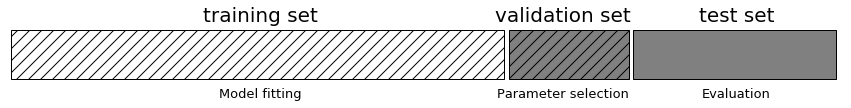

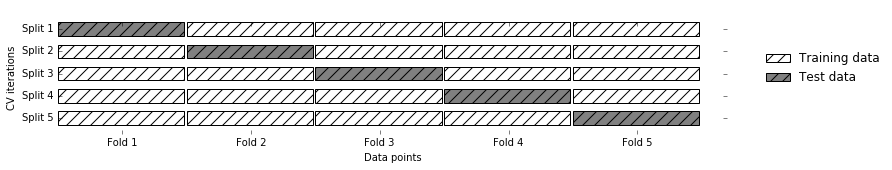

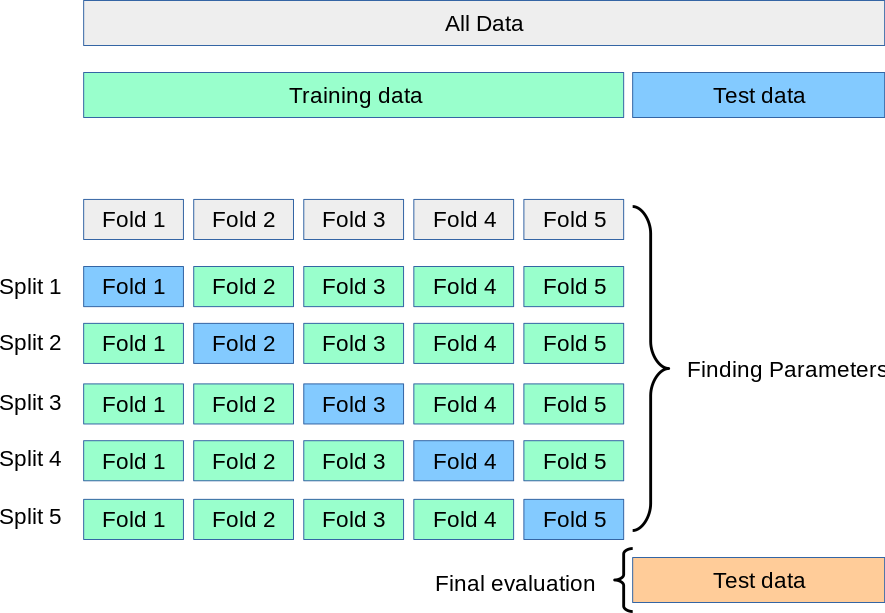

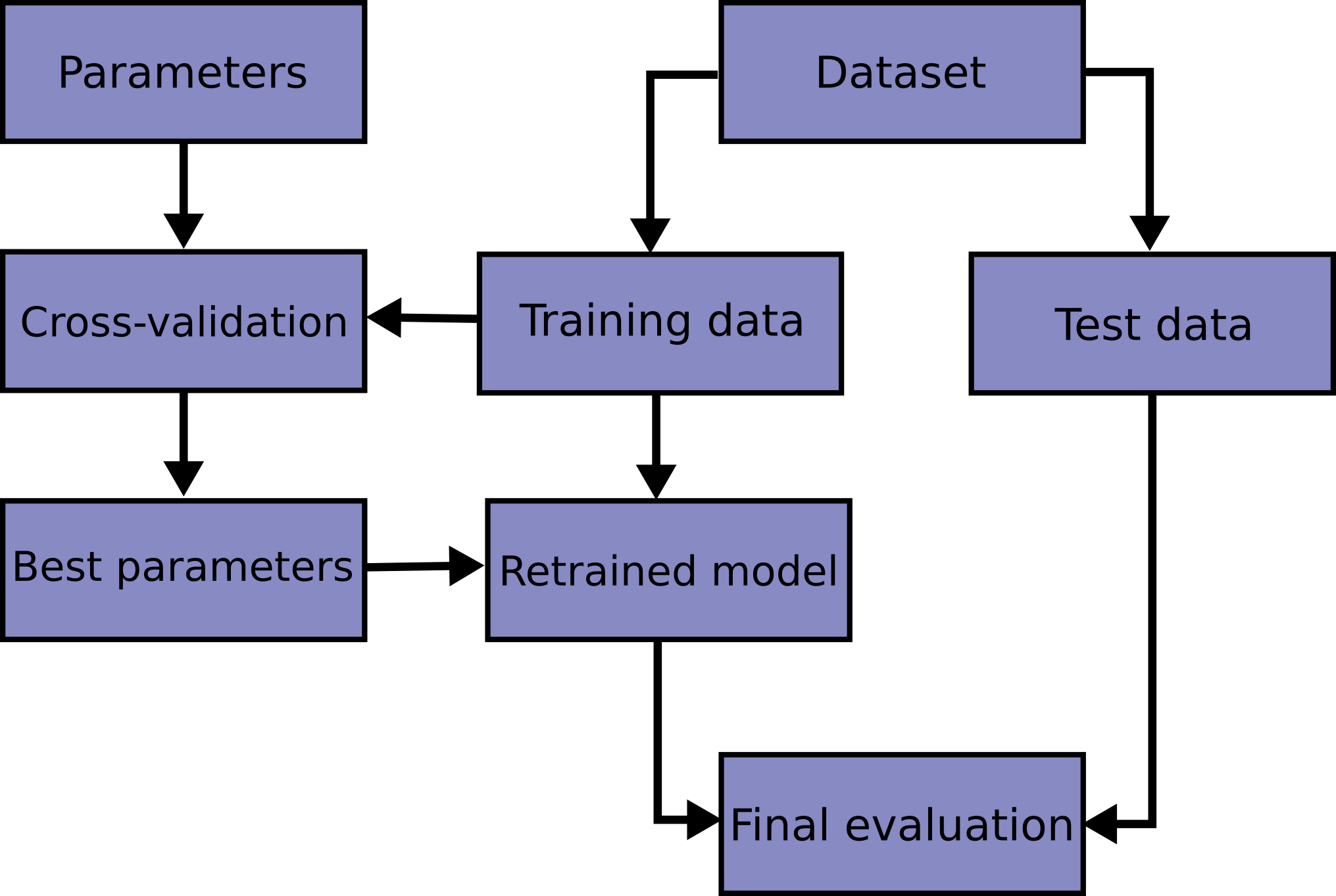

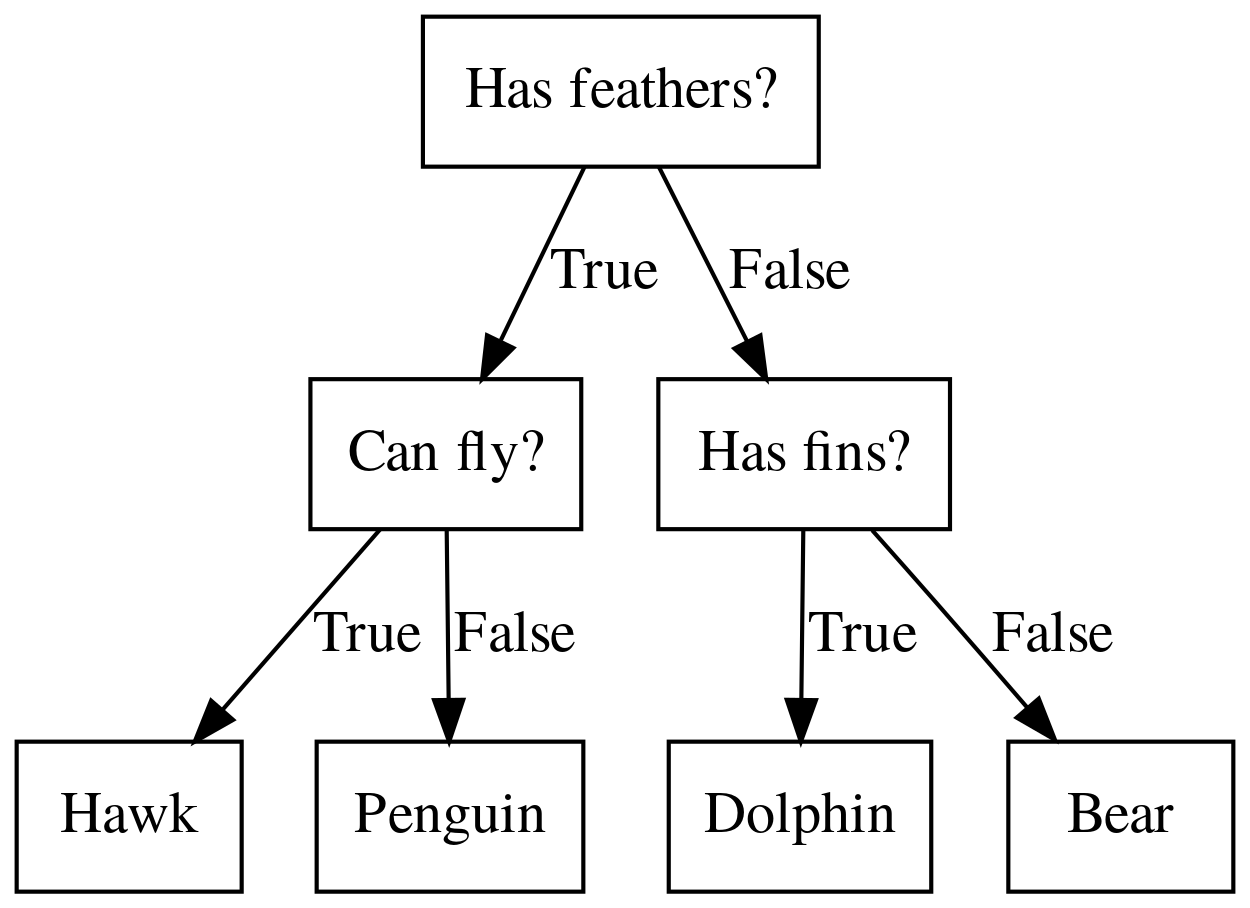

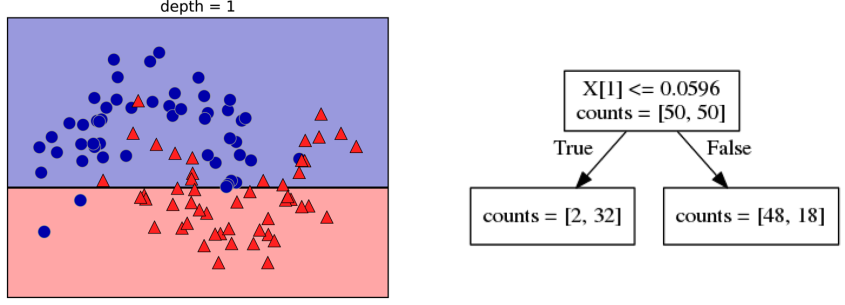

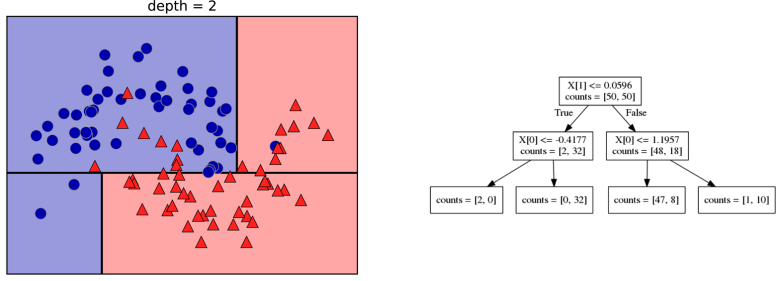

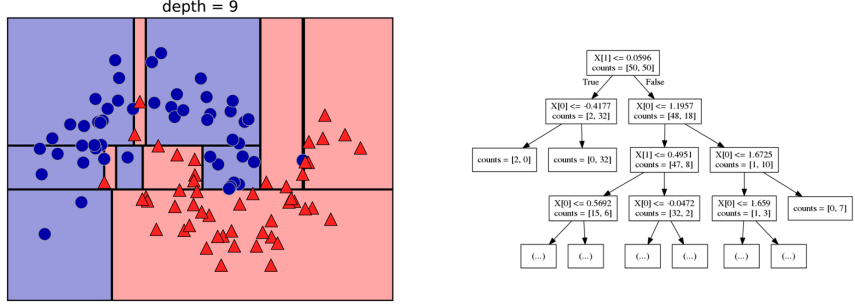

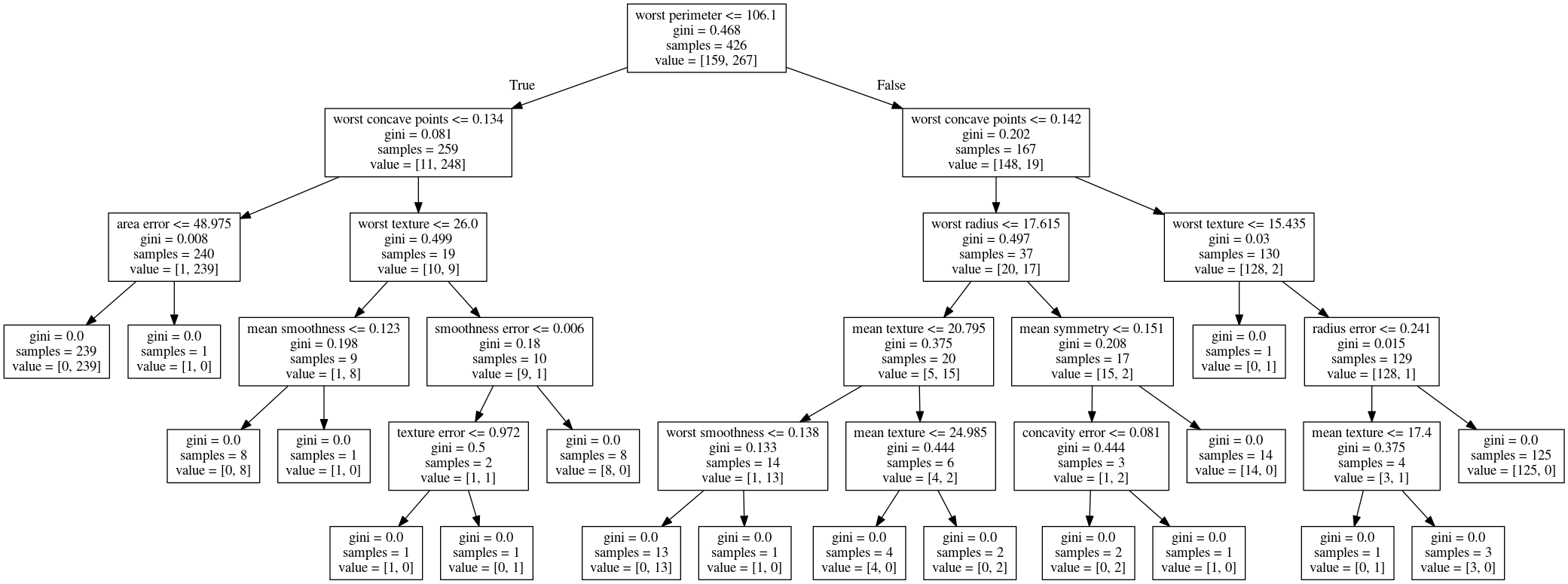

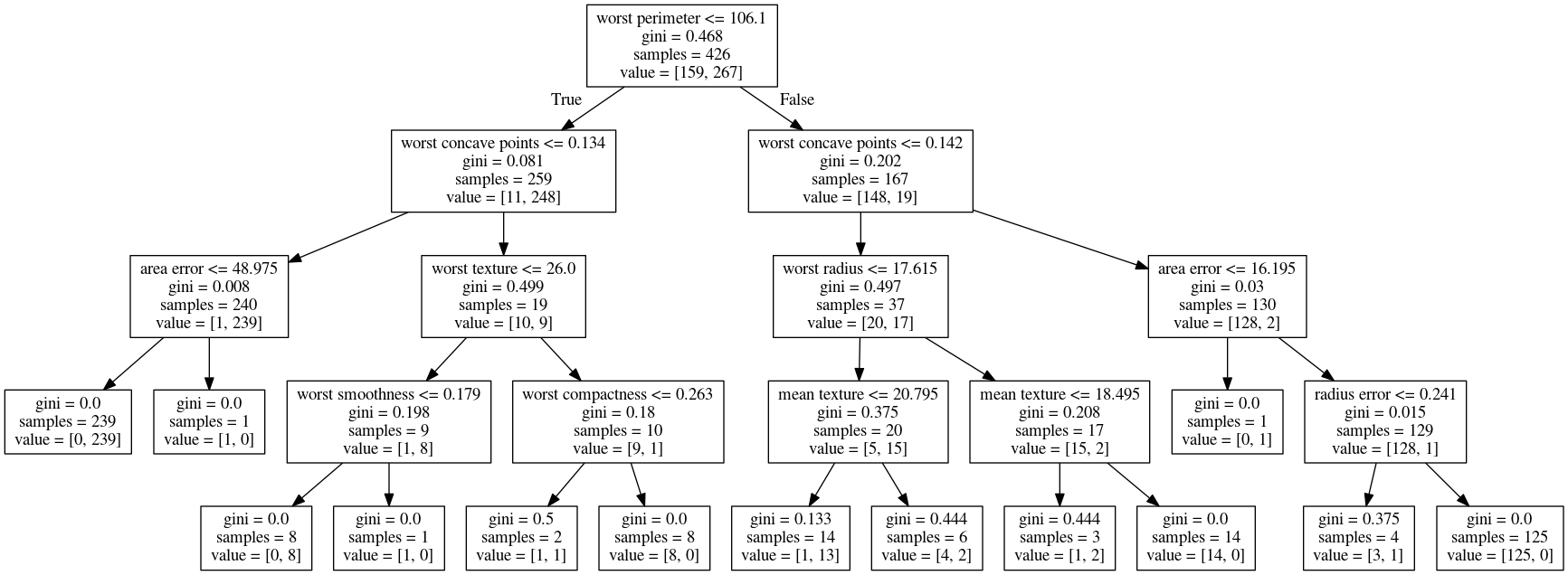

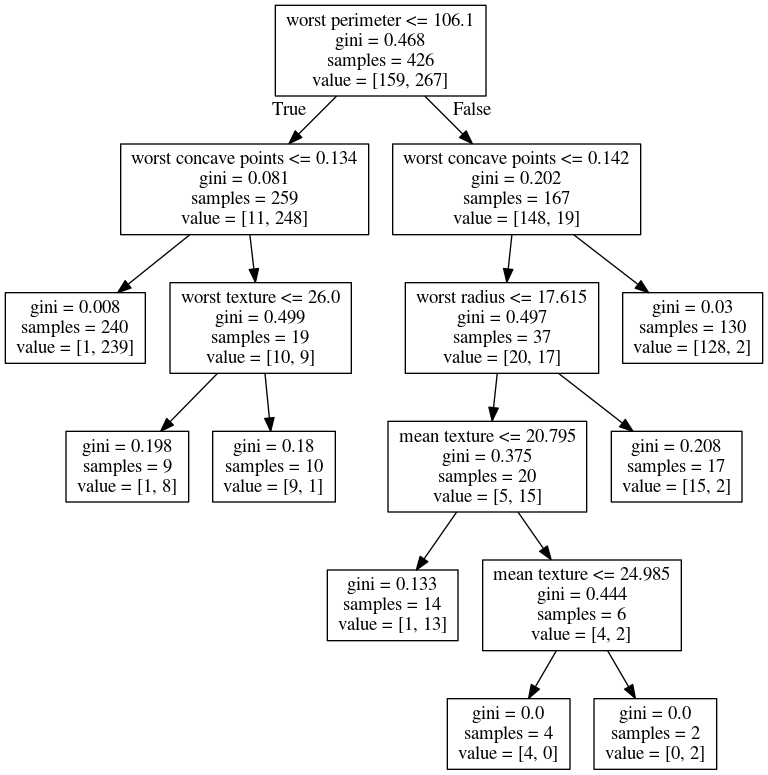

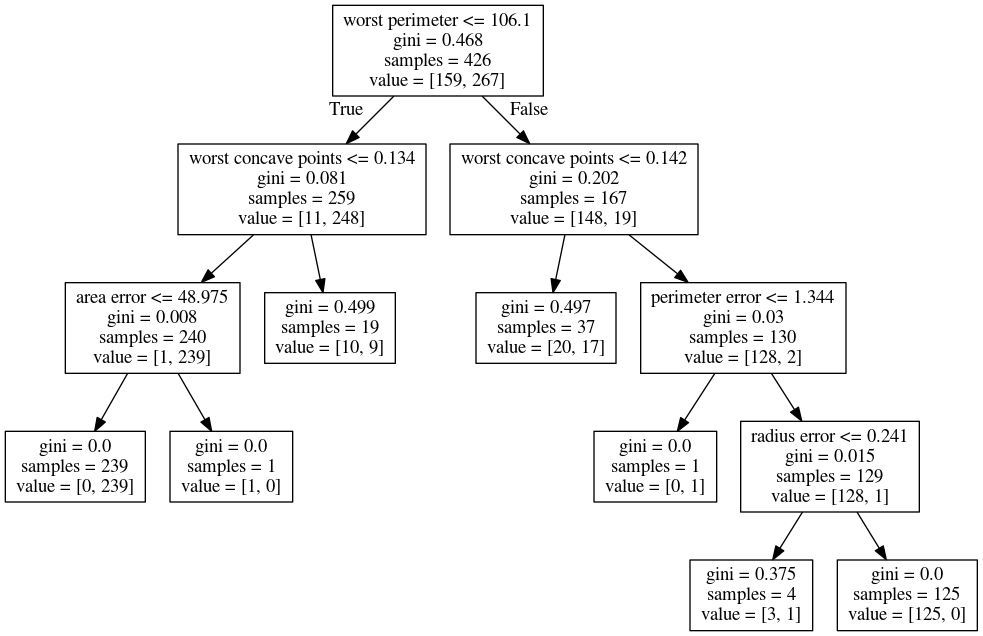

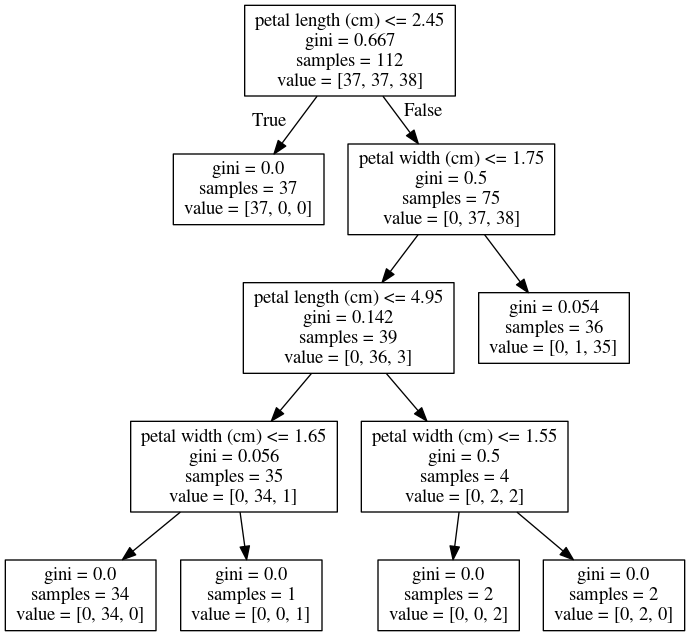

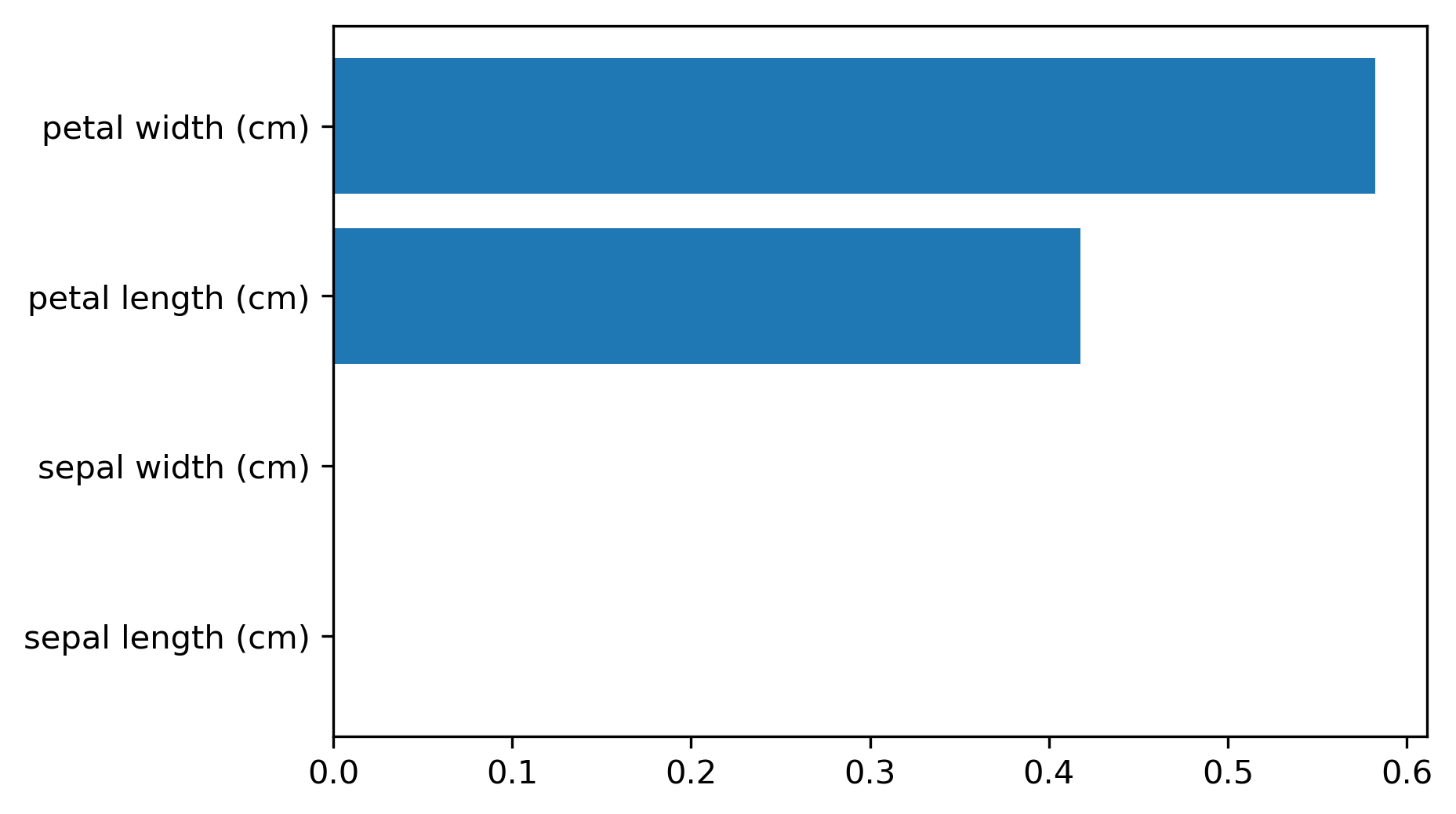

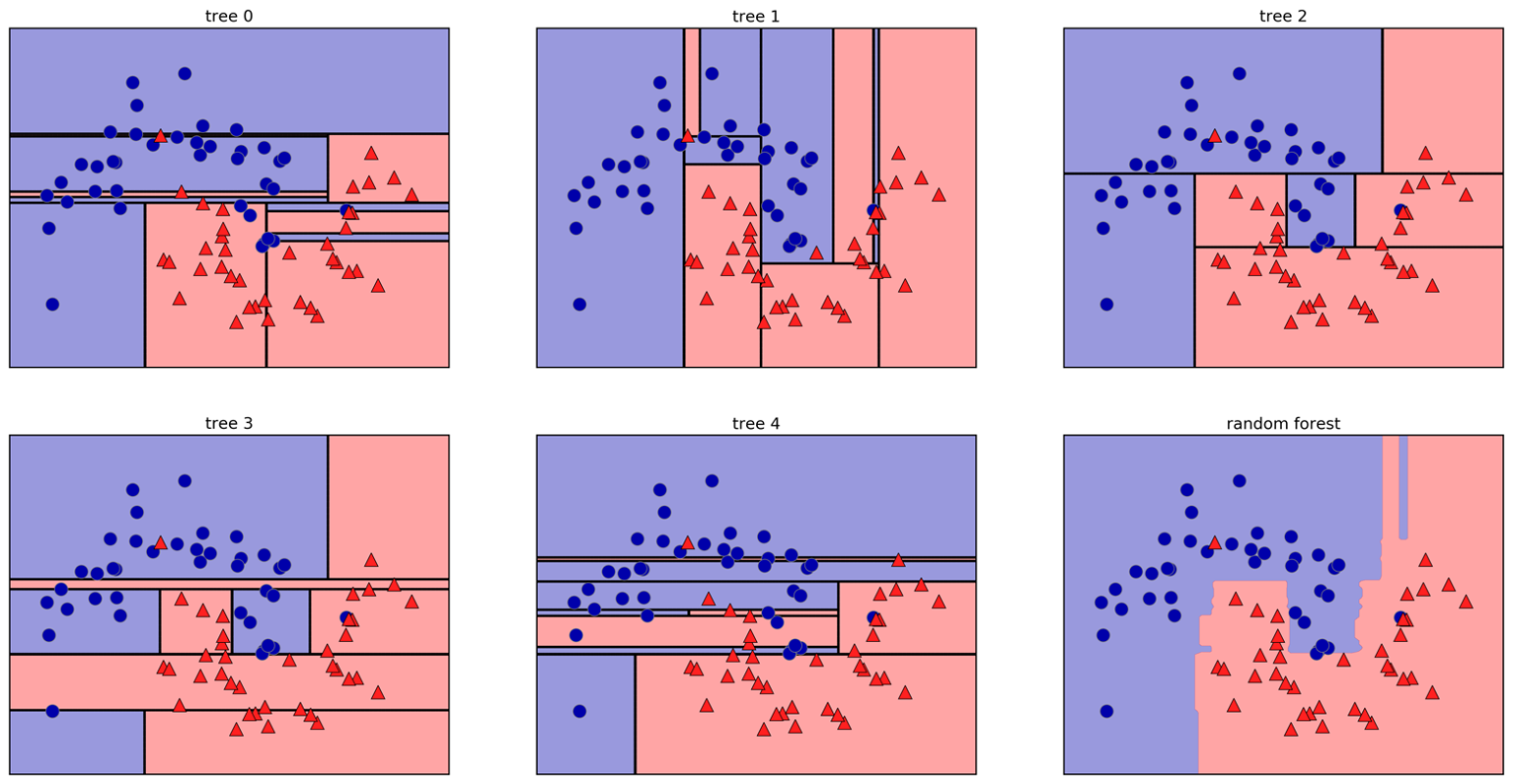

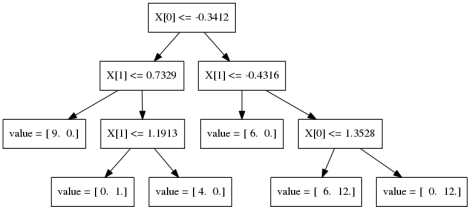

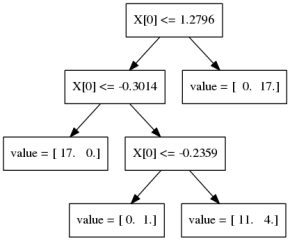

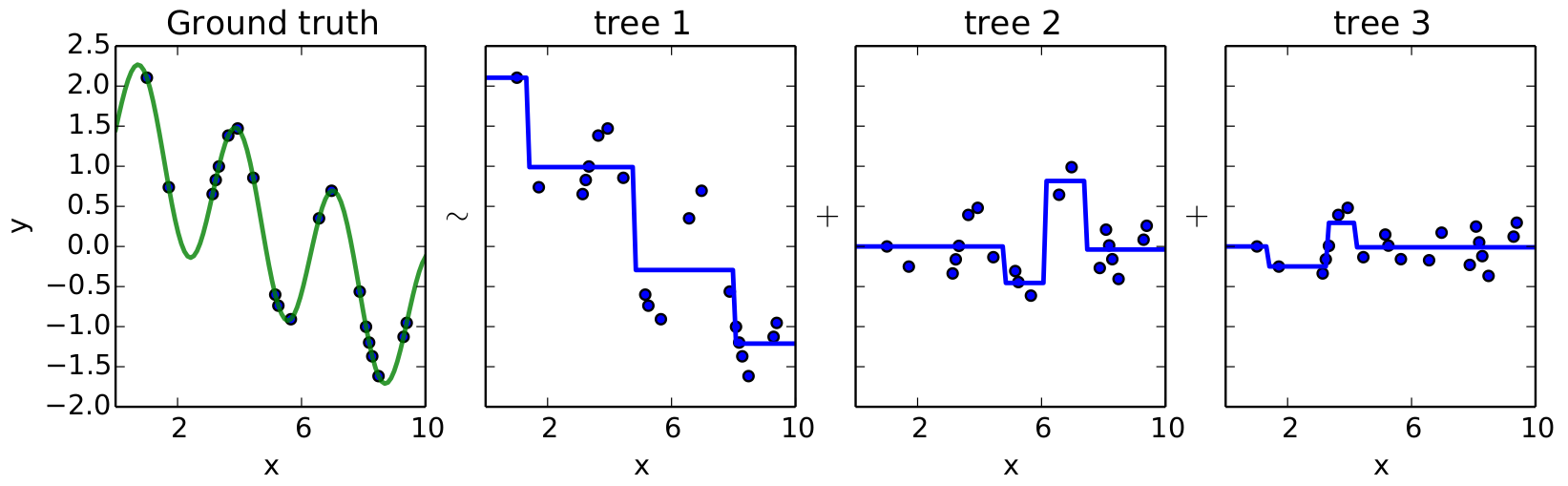

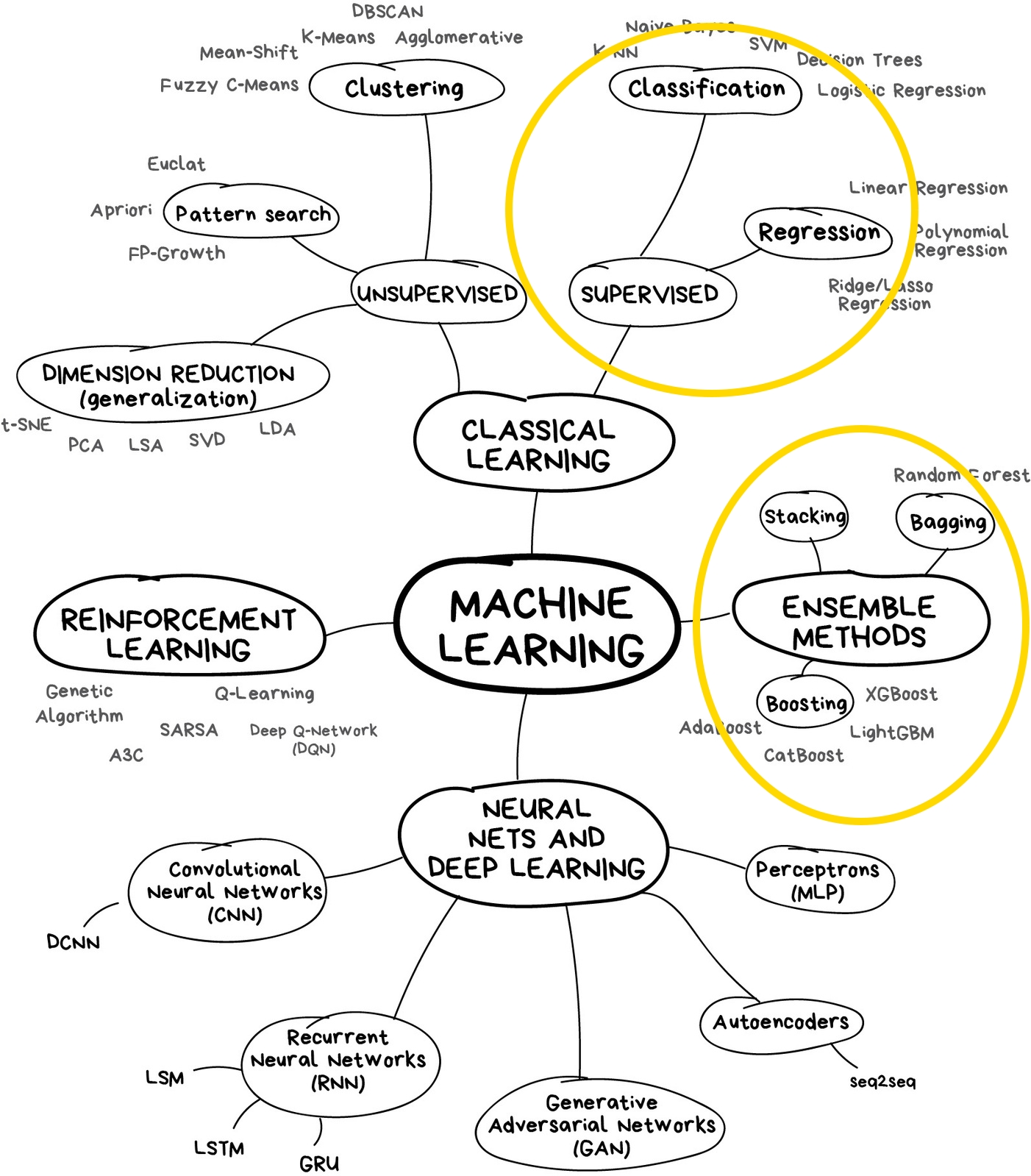

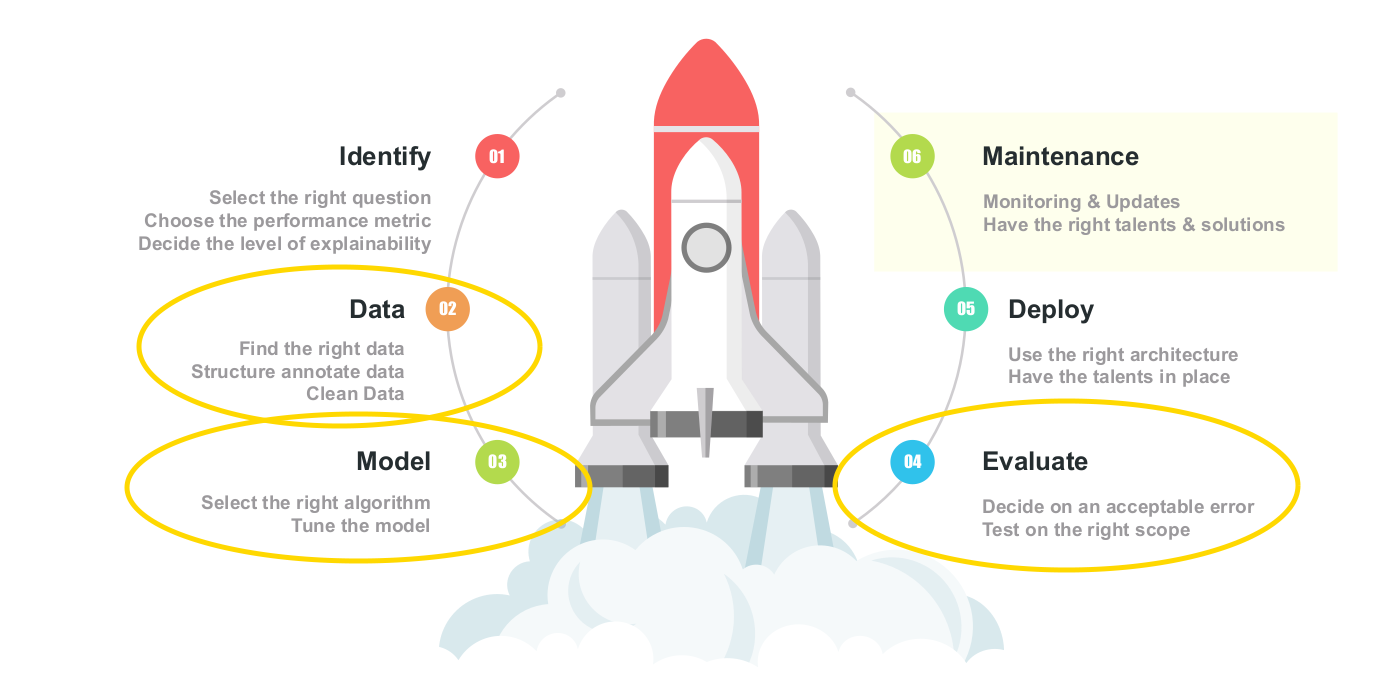

class: middle, center, title-slide

.center[.width-60[]]

# AI Black Belt - Yellow

Day 4/4: Learn how to let the machine tune itself

<br><br><br>

---

class: middle

## Outline

.inactive[[Day 1] Introduction to machine learning with Python]

.inactive[[Day 2] Learn to identify and solve supervised learning problems]

.inactive[[Day 3] Practice regression algorithms for sentiment analysis]

[Day 4] Learn how to let the machine tune itself

- Learn how to automatically tune and evaluate your models.
- Get familiar with intermediate ML algorithms.
- Learn how to structure your ML pipeline with Scikit-Learn.

---

class: middle

# Model selection and evaluation

---

# Effect of `n_neighbors`

<br>

.center.width-50[]

.center[`n_neighbors=1`]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

count: false

# Effect of `n_neighbors`

<br>

.center.width-50[]

.center[`n_neighbors=3`]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

.center.width-60[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

# Model complexity

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

# Overfitting and underfitting

<br>

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

count: false

# Overfitting and underfitting

<br>

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]
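---

class: middle

## Sketch: training vs. test accuracy

This is a minimal sketch of the experiment behind these curves. It assumes a synthetic `make_moons` dataset (not the data used in the figures) and a single train/test split. A small `n_neighbors` gives a complex model, and a large `n_neighbors` gives a simple one.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic, noisy 2D data (an assumption made for illustration).
X, y = make_moons(n_samples=300, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Record (training accuracy, test accuracy) for each value of n_neighbors:
# small values -> complex model, large values -> simple model.
results = {}
for k in [1, 3, 5, 10, 20, 50, 100]:
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    results[k] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"n_neighbors={k:3d}  train={results[k][0]:.2f}  test={results[k][1]:.2f}")
```

With `n_neighbors=1`, the training accuracy is 1.0 because every training point is its own nearest neighbor. That score therefore says nothing about generalization. Choosing `n_neighbors` from the test column would, in turn, make the test score optimistic.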
---

count: false

# Overfitting and underfitting

<br>

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

# Model selection and evaluation

.center.width-80[]

.exercice[How can we do both hyperparameter tuning and model evaluation?]

---

class: middle

## Threefold split

.center.width-100[]

- pro: fast, simple
- con: high variance (the score depends on a single split), wasteful use of data

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Cross-validation

.center.width-100[]

- pro: more stable estimate, makes better use of the data
- con: slower (one fit per fold)

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Cross-validation + test set

.center.width-80[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Grid search

.center.width-80[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

Jump to `day4-01-model-selection.ipynb`.

[](https://mybinder.org/v2/gh/AI-BlackBelt/yellow/master)

---

class: middle

# Trees, random forests and boosting

---

# Decision trees

<br><br>

.center.width-60[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Tree construction

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]
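---

class: middle

## Sketch: one greedy split

Tree construction is greedy. Each node is split on the feature and threshold that most reduce impurity, and the procedure then recurses on both children. Below is a from-scratch sketch of a single split step. It assumes Gini impurity, which is scikit-learn's default `criterion` for `DecisionTreeClassifier`. The toy data and the helpers `gini` and `best_split` are hypothetical, and the library's real implementation is far more optimized.

```python
import numpy as np

def gini(y):
    """Gini impurity of a label array: 1 - sum_k p_k^2 (hypothetical helper)."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def best_split(X, y):
    """Exhaustively search the (feature, threshold) pair minimizing the
    weighted Gini impurity of the two children (hypothetical helper).
    Returns (feature, threshold, impurity); feature is None if no split helps."""
    n_samples, n_features = X.shape
    best = (None, None, gini(y))  # baseline: impurity of the unsplit node
    for j in range(n_features):
        values = np.unique(X[:, j])
        # Candidate thresholds: midpoints between consecutive distinct values,
        # so both children are always non-empty.
        for t in (values[:-1] + values[1:]) / 2:
            left = X[:, j] <= t
            impurity = (left.sum() * gini(y[left])
                        + (~left).sum() * gini(y[~left])) / n_samples
            if impurity < best[2]:
                best = (j, t, impurity)
    return best

# Toy data: class 0 has small values of feature 0, class 1 has large values.
X = np.array([[1.0, 5.0], [2.0, 4.0], [3.0, 7.0], [6.0, 1.0], [7.0, 2.0], [8.0, 3.0]])
y = np.array([0, 0, 0, 1, 1, 1])
print(best_split(X, y))
```

On this toy data, the threshold 4.5 on feature 0 yields two pure children. A full tree would apply `best_split` again to each child until a stopping rule such as `max_depth` is reached.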
---

count: false
class: middle

## Tree construction

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

count: false
class: middle

## Tree construction

.center.width-100[]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Important parameters

- `max_depth`
- `max_leaf_nodes`
- `min_samples_split`
- `min_impurity_decrease`

---

class: middle

.center.width-100[]

.center[No pruning]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

.center.width-100[]

.center[`max_depth=4`]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

.center.width-60[]

.center[`max_leaf_nodes=8`]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

.center.width-100[]

.center[`min_samples_split=50`]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

## Feature importances

.grid[
.kol-1-2[.width-100[]]
.kol-1-2[.width-100[]]
]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

# Random forests

<br>

.center.width-100[]

.center[Build $T$ randomized trees and average their predictions.]

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]
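---

class: middle

## Sketch: a forest from scratch

This is a from-scratch sketch of the idea, assuming `make_moons` toy data. Each tree is fit on a bootstrap sample and, through `max_features`, considers only a random subset of features at each split. The forest's prediction averages the trees' class probabilities. The helpers `fit_forest` and `predict_forest` are hypothetical; in practice, `RandomForestClassifier` does all of this for you.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

def fit_forest(X, y, n_estimators=50, max_features="sqrt", seed=0):
    """Fit n_estimators randomized trees (hypothetical helper)."""
    rng = np.random.RandomState(seed)
    trees = []
    for _ in range(n_estimators):
        # Randomization 1: bootstrap sample, drawn with replacement.
        # Assumes every bootstrap sample contains all classes.
        idx = rng.randint(0, len(X), size=len(X))
        # Randomization 2: random subset of features at every split.
        tree = DecisionTreeClassifier(max_features=max_features,
                                      random_state=rng.randint(2**31 - 1))
        trees.append(tree.fit(X[idx], y[idx]))
    return trees

def predict_forest(trees, X):
    """Average the trees' class probabilities, then pick the most likely class."""
    proba = np.mean([tree.predict_proba(X) for tree in trees], axis=0)
    return trees[0].classes_[np.argmax(proba, axis=1)]

X, y = make_moons(n_samples=300, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
trees = fit_forest(X_train, y_train)
acc = np.mean(predict_forest(trees, X_test) == y_test)
print(f"test accuracy with {len(trees)} trees: {acc:.2f}")
```

Averaging probabilities instead of counting votes matches how the scikit-learn documentation describes `RandomForestClassifier`. Here, `n_estimators` and `max_features` play the same roles as the library parameters of the same name.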
---

class: middle, black-slide

.grid[
.kol-1-2[
<br><br><br>

## Condorcet Jury theorem

For a jury of $M$ independent members, each with a probability $p$ of being right, the probability $\mu$ that the majority decision is right tends to 1 as $M\to \infty$ if $p>0.5$.
]
.kol-1-2[.center.width-90[]]
]

---

class: middle

## Important parameters

- `n_estimators`: the more, the better (performance plateaus while training cost keeps growing)
- `max_features`: controls the amount of randomness (number of features considered at each split)

---

# Gradient boosting

<br><br>

$$
\begin{aligned}
f\_{1}(x) &\approx y \\\\
f\_{2}(x) &\approx y - f\_{1}(x) \\\\
f\_{3}(x) &\approx y - f\_{1}(x) - f\_{2}(x)
\end{aligned}
$$

$y \approx$ .width-30[] + .width-30[] + .width-15[] + ...

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

count: false

# Gradient boosting

<br><br>

$$
\begin{aligned}
f\_{1}(x) &\approx y \\\\
f\_{2}(x) &\approx y - \gamma f\_{1}(x) \\\\
f\_{3}(x) &\approx y - \gamma f\_{1}(x) - \gamma f\_{2}(x)
\end{aligned}
$$

$y \approx$ $\gamma$ .width-30[] + $\gamma$ .width-30[] + $\gamma$ .width-15[] + ...

$\gamma$ = learning rate

.footnote[Credits: Andreas Mueller, [Introduction to Machine Learning with Scikit-Learn](https://github.com/amueller/ml-workshop-2-of-4/), 2019.]

---

class: middle

.center.width-100[]

---

class: middle

## Important parameters

- `n_estimators` and `learning_rate`
- `max_depth`
- `max_features`

---

class: middle

Jump to `day4-02-forest-boosting.ipynb`.

[](https://mybinder.org/v2/gh/AI-BlackBelt/yellow/master)

---

class: middle

# Yellow belt final test!

---

class: middle

Jump to `day4-03-yellow-belt-test.ipynb`.

[](https://mybinder.org/v2/gh/AI-BlackBelt/yellow/master)

---

class: middle

# Wrap-up

---

class: middle

## Table of contents

[Day 1] Introduction to machine learning with Python

- Overview of artificial intelligence and machine learning.
- Get familiar with the Python data ecosystem.
- Train your first ML model.

[Day 2] Learn to identify and solve supervised learning problems

- Learn to recognize classification and regression problems in the wild.
- Practice classification algorithms with Scikit-Learn.

---

class: middle

[Day 3] Practice regression algorithms for sentiment analysis

- Learn to properly evaluate the performance of your models.
- Practice regression algorithms with Scikit-Learn.
- Implement a full ML pipeline: from raw text to sentiment analysis.

[Day 4] Learn how to let the machine tune itself

- Learn how to automatically tune and evaluate your models.
- Get familiar with intermediate ML algorithms.
- Learn how to structure your ML pipeline with Scikit-Learn.

---

class: middle

.center.width-70[]

.footnote[Credits: vas3k, [Machine Learning for Everyone](https://vas3k.com/blog/machine_learning/), 2018.]

---

class: middle

.center.width-100[]

---

class: middle, black-slide

.center.width-80[]

---

count: false
class: black-slide, middle, center

The end.